This post is a summary of some ideas for a lightweight semantic interoperability framework. It is mainly a composition of existing open standards, forming a framework that helps organisations ensure that semantic and technical descriptions stay connected over time. The idea is to let semantic interoperability emerge and increase over time without having a large centralized organisation defining vocabularies. Main points:

- The benefits appear when a vocabulary is used.

- An important factor is how a vocabulary and its parts can be published, discovered and referenced.

- There needs to be one and only one vocabulary artifact.

- The vocabulary artifact must be machine processable to allow aggregation and automatic generation of other artifacts.

Background

The need for interoperability arises in scenarios with many contributing actors. In the old days this was solved by hiring a (large) consulting organization that (hopefully) had experience from gluing together many products from their vast product portfolio. Everyone involved was happy if it worked. Being able to swap out a part of this system or connect it to a third party that had a different technical platform was considered a risk.

Today more work is being done but in my experience there are still issues in keeping it all together. Domain experts create terminology in Word documents and architects draw UML class diagrams. They all talk about the domain but each type of artifact does not describe the domain sufficiently. In a different room developers merge domain concepts with coding practices to form software that may express fragments of a domain.

Little work is done to define vocabularies in a way that works for both software developers and domain experts. Typically you find a Word document with a flat terminology that is ignored by the software developers, or class diagrams in UML or software documentation that are impossible for domain experts to understand. And when you look at the code or interfaces of the system there are no references to the correct item in a vocabulary.

How can we make them work together?

Requirements and challenges

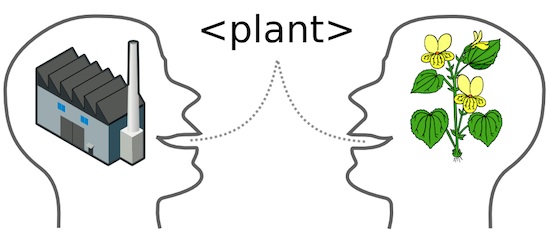

I like to think of semantic interoperability as a state where the same cognitive processes kick in for two persons studying a specific artefact from the domain they are working in.

In order to work with this in a structured way we need to simplify it. One way to look at interoperability can be found in the draft of the European Interoperability Framework version 2. It introduces four interoperability levels. If we remove the European cross border services context they can be described in more general terms:

- Technical interoperability: issues involved in linking computer systems and services together (e.g. transport and serialization of data).

- Semantic interoperability: the meaning of information specified in a way understood by all parties (e.g. definitions, relations and structure of terms used to describe data).

- Organizational interoperability: coordination of processes in the context where data is used/transformed (e.g. shared definitions of the roles, responsibilities and interactions of/between participants).

- Legal interoperability: shared interpretation and understanding of laws regulating information exchange and cooperation (e.g. can we transfer information about people? What about privacy?).

There are other models that could be used to discuss levels of interoperability (e.g. LCIM - Levels of Conceptual Interoperability Model) but let’s stick with the EIF 2 model above.

If you have a scenario with only two parties everything is simple. You could just do the minimum amount of work to make sure systems talk to each other and that people share a common image of the various levels of interoperability. Enabling more parties to participate requires more work though.

If you have a scenario with many parties (where some may not yet exist) you have to create a framework describing how interoperability should work by harmonizing a set of solutions at each level of interoperability. In a dictatorship this is easy. Just evaluate a few solutions, choose one and make everyone use it. In other contexts it is more complicated.

If we consider the EIF 2 model above as a set of requirements we should also make sure that the solutions we propose:

- scale down: If the parties (organizations, departments etc) vary greatly in size (as they will if the number is sufficiently big) some may consider the solution you propose in a framework unnecessarily complex. They are right. In order to maximize the number of parties and processes that can participate in an interoperability scenario you will have to make sure the proposed solutions scale down.

- are technology neutral: Given a large number of organizations you will find almost every technology platform you can imagine. There will also be a number of ways to send and receive information. Some like to force a specific set of transport mechanisms as part of an interoperability framework but this will quickly put you on a path where you can’t make use of new standards and technologies. Forcing participating organizations to use a specific tool or platform will fail.

- give a reasonable amount of flexibility: This may require the framework to contain partly overlapping standards.

- facilitate an emerging semantic interoperability: The idea of having a central organization defining everything only works in a dictatorship. We should enable decentralized participation in vocabulary work.

- are discoverable and referenceable: If the artefacts that are created are difficult to find for a newcomer you can give up now. By lowering the threshold to participate more parties can be involved and more information can be reused.

This proposal is aimed at semantic interoperability only.

A lightweight semantic interoperability framework proposal

1. The Web Ontology Language (OWL) is used to express vocabularies

The primary reason for selecting OWL is not the features it has to express domain concepts but rather the possibilities of publishing it and referencing it on the web that come bundled with it. Using the web as a publishing platform enables us to make use of already existing standards for referencing a vocabulary and its parts.

Although plagued by research projects of poor usability and lack of longevity there are several tools to create vocabularies in OWL. The framework does not specify the process for creating a vocabulary as that may hinder small organizations from participating.

2. Vocabularies are published properly on the web

Putting an OWL vocabulary on the web means that it can easily be discovered, referenced and transformed. Not only will it help your own organization but it will lower the threshold for others who want to use parts of it in their own work. As soon as that happens you have the first step towards semantic interoperability.

By “published properly” I mean that you should choose your URI for the vocabulary wisely as it will be the reference string used in software interfaces and other artifacts down the road. Many get web publishing wrong by scrapping one of the best features: long term referenceability, as they dump information tightly coupled to the publishing tool they are using. When you change the tool (e.g. a content management system) you may break references for everyone else. Don’t do that.

To make the vocabulary human readable (nobody wants to read OWL/RDF-schema) generate at least an HTML-version of the vocabulary for users pointing their browser to the URL of the vocabulary and a class hierarchy overview to help users understand relations between things.
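Generating the human-readable view can be quite small. The following is a minimal sketch, not a finished tool: it assumes the vocabulary is serialized as RDF/XML with top-level `owl:Class` elements, that each class has at most one `rdfs:subClassOf` parent, and that the hierarchy has no cycles. The `example.org` vocabulary and the function names `read_classes` and `render_hierarchy` are made up for illustration.

```python
import xml.etree.ElementTree as ET
from html import escape

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
OWL = "http://www.w3.org/2002/07/owl#"

# Hypothetical vocabulary; the example.org URIs are placeholders.
VOCAB_RDFXML = """<rdf:RDF
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"
    xmlns:owl="http://www.w3.org/2002/07/owl#">
  <owl:Ontology rdf:about="http://example.org/vocab/party"/>
  <owl:Class rdf:about="http://example.org/vocab/party#Party">
    <rdfs:label>Party</rdfs:label>
    <rdfs:comment>A person or organization.</rdfs:comment>
  </owl:Class>
  <owl:Class rdf:about="http://example.org/vocab/party#Person">
    <rdfs:label>Person</rdfs:label>
    <rdfs:comment>A human being.</rdfs:comment>
    <rdfs:subClassOf rdf:resource="http://example.org/vocab/party#Party"/>
  </owl:Class>
</rdf:RDF>
"""


def read_classes(rdfxml):
    """Map each class URI to its label, comment and (single) parent URI."""
    root = ET.fromstring(rdfxml)
    classes = {}
    for node in root.findall(f"{{{OWL}}}Class"):
        uri = node.get(f"{{{RDF}}}about")
        parent = node.find(f"{{{RDFS}}}subClassOf")
        classes[uri] = {
            "label": node.findtext(f"{{{RDFS}}}label", default=uri),
            "comment": node.findtext(f"{{{RDFS}}}comment", default=""),
            "parent": parent.get(f"{{{RDF}}}resource") if parent is not None else None,
        }
    return classes


def render_hierarchy(classes, parent=None):
    """Render the classes below `parent` as nested HTML lists."""
    def is_child(info):
        if parent is None:
            # Roots: no parent, or a parent defined outside this vocabulary.
            return info["parent"] not in classes
        return info["parent"] == parent

    children = sorted(uri for uri, info in classes.items() if is_child(info))
    if not children:
        return ""
    items = []
    for uri in children:
        info = classes[uri]
        items.append('<li><a href="{0}">{1}</a>: {2}{3}</li>'.format(
            escape(uri), escape(info["label"]), escape(info["comment"]),
            render_hierarchy(classes, uri)))
    return "<ul>" + "".join(items) + "</ul>"


if __name__ == "__main__":
    print(render_hierarchy(read_classes(VOCAB_RDFXML)))
```

Every list item links back to the class URI, so the HTML page and the machine-readable vocabulary share the same identifiers.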

3. Use a standardized way of referencing vocabularies in other artifacts

Although OWL may seem to be only for semantic web scenarios it can be used in other areas. By standardizing how references from WSDL and XML schema are made it is possible to keep an unbroken chain of references. SAWSDL provides a mechanism to reference e.g. a class in the vocabulary. This makes it possible for developers to refer to the vocabulary in a consistent way from XML schemas and WSDL files and provides a way to keep the vocabulary closely connected to API implementations.
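To illustrate the reference chain, here is a rough sketch of how a build step might collect the SAWSDL `modelReference` annotations from an XML schema and flag any that do not point at a known vocabulary term. The schema, the `example.org` URIs and the helper names `model_references` and `unresolved` are assumptions for the example. Only the `sawsdl:modelReference` attribute and its namespace come from the SAWSDL recommendation.

```python
import xml.etree.ElementTree as ET

SAWSDL = "http://www.w3.org/ns/sawsdl"
MODEL_REF = f"{{{SAWSDL}}}modelReference"

# Hypothetical schema annotated with references into a vocabulary.
SCHEMA = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           xmlns:sawsdl="http://www.w3.org/ns/sawsdl">
  <xs:complexType name="PersonType"
                  sawsdl:modelReference="http://example.org/vocab/party#Person">
    <xs:sequence>
      <xs:element name="familyName" type="xs:string"
                  sawsdl:modelReference="http://example.org/vocab/party#familyName"/>
      <xs:element name="shoeSize" type="xs:int"/>
    </xs:sequence>
  </xs:complexType>
</xs:schema>
"""


def model_references(schema_xml):
    """Return (component name, vocabulary URI) pairs in document order."""
    root = ET.fromstring(schema_xml)
    refs = []
    for node in root.iter():
        value = node.get(MODEL_REF)
        if value:
            # A modelReference value is a whitespace-separated list of URIs.
            for uri in value.split():
                refs.append((node.get("name"), uri))
    return refs


def unresolved(refs, known_terms):
    """Return the references that do not match any known vocabulary term."""
    return [(name, uri) for name, uri in refs if uri not in known_terms]


if __name__ == "__main__":
    known = {"http://example.org/vocab/party#Person"}
    for name, uri in unresolved(model_references(SCHEMA), known):
        print(f"{name}: no such term {uri}")
```

A check like this could run whenever a schema or WSDL file changes, so a broken reference is noticed before it reaches other parties.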

One important area missing is how to point to vocabularies from code. Code is a design artifact for a system and as such it is important that it is possible to keep an unbroken reference chain to it too. But we’ll leave that open for now.

4. Aggregate all vocabularies on a central website

Although vocabularies can and should be published under a URI controlled by the authoring organization they should be aggregated on a central searchable website. Aggregation is easy because the vocabularies are machine readable, and it serves two purposes:

- It helps people discover other vocabularies before creating their own. This is an important step in creating an environment for an emerging semantic interoperability.

- The aggregated material will become a tool to find areas where harmonization efforts should be focused. By querying the aggregated information it is possible to find vocabularies that define similar things. Multiple classes called “Person”? Get going.
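The "multiple classes called Person" query could look something like the sketch below. It assumes the central site has already harvested each vocabulary into a simple index of class URIs. The index contents, the `example.*` URIs and the function names are invented for illustration, and matching on the local name alone is a deliberately naive heuristic that only surfaces candidates for a human to review.

```python
from collections import defaultdict

# Hypothetical aggregated index: vocabulary URI -> class URIs it defines.
AGGREGATED = {
    "http://example.org/vocab/party": [
        "http://example.org/vocab/party#Person",
        "http://example.org/vocab/party#Organization",
    ],
    "http://example.net/hr": [
        "http://example.net/hr/Person",
        "http://example.net/hr/Employment",
    ],
}


def local_name(uri):
    """Take the part after '#', or after the last '/' if there is no fragment."""
    return uri.rsplit("#", 1)[-1].rsplit("/", 1)[-1]


def harmonization_candidates(aggregated):
    """Group class URIs whose local name occurs in more than one vocabulary."""
    by_name = defaultdict(dict)
    for vocab, class_uris in aggregated.items():
        for uri in class_uris:
            # Compare case-insensitively; keep one URI per vocabulary.
            by_name[local_name(uri).lower()][vocab] = uri
    return {
        name: sorted(per_vocab.values())
        for name, per_vocab in by_name.items()
        if len(per_vocab) > 1
    }


if __name__ == "__main__":
    for name, uris in harmonization_candidates(AGGREGATED).items():
        print(name, "->", ", ".join(uris))
```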

5. Express provenance in the vocabulary

Provenance provides a foundation for assessing authenticity and enabling trust. By standardizing information about provenance you also facilitate interaction between parties involved in the domain.
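As a sketch of what standardized provenance could mean in practice, the check below verifies that a vocabulary's `owl:Ontology` element carries a minimum set of provenance properties. Using Dublin Core terms and requiring exactly `creator`, `publisher` and `modified` are assumptions made for this example, not something the proposal prescribes. The vocabulary content is hypothetical.

```python
import xml.etree.ElementTree as ET

OWL = "http://www.w3.org/2002/07/owl#"
DCT = "http://purl.org/dc/terms/"

# Assumed minimum set of provenance properties (Dublin Core terms).
REQUIRED = ("creator", "publisher", "modified")

# Hypothetical vocabulary header; names and dates are placeholders.
VOCAB = """<rdf:RDF
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:owl="http://www.w3.org/2002/07/owl#"
    xmlns:dcterms="http://purl.org/dc/terms/">
  <owl:Ontology rdf:about="http://example.org/vocab/party">
    <dcterms:creator>Example Agency</dcterms:creator>
    <dcterms:modified>2011-01-15</dcterms:modified>
  </owl:Ontology>
</rdf:RDF>
"""


def missing_provenance(rdfxml):
    """List the required provenance properties absent from the ontology header."""
    root = ET.fromstring(rdfxml)
    ontology = root.find(f"{{{OWL}}}Ontology")
    if ontology is None:
        return list(REQUIRED)
    return [p for p in REQUIRED if ontology.find(f"{{{DCT}}}{p}") is None]


if __name__ == "__main__":
    print(missing_provenance(VOCAB))
```

The central aggregation site could run a check like this on each harvested vocabulary and show the result next to it.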

What do you think?

I believe this could be a lightweight approach to working with semantic interoperability in a way that scales (both up and down) and that lets semantic interoperability emerge over time. I guess it could work for government scenarios at country scale as well as for a small organization.

The only thing I miss is a standardized way of referencing a vocabulary from code.

Please leave your feedback.